In this work we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters. The Llama 2 paper describes the architecture in enough detail to help data scientists recreate and fine-tune the models, unlike OpenAI papers, where you have to deduce it.

Jose Nicholas Francisco. Published on 08/23/23, updated on 10/11/23. Llama 1 vs Meta's Genius Breakthrough in AI Architecture: Research Paper Breakdown. First...

Oct 8, 2023. Llama 2 is a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters.

In this work we develop and release Llama 2, a family of pretrained and fine-tuned LLMs, Llama 2 and Llama 2-Chat, at scales up to 70B parameters. On the series of helpfulness and safety...

The Llama 2 chat models follow a specific template when prompted in a chat style, using tags such as `[INST]` arranged in a particular structure.

Llama 2 is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters. This is the repository for the 13B fine-tuned...

What's the best practice for the prompt template when prompting the Llama 2 chat models? Note that this only applies to the Llama 2 chat models. The base models have no prompt structure.

In this post we're going to cover everything I've learned while exploring Llama 2, including how to format chat prompts, when to use which Llama variant, when to use ChatGPT...
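To make the chat template concrete, here is a minimal sketch of a single-turn prompt builder. The function name `build_llama2_prompt` is hypothetical, and the `<<SYS>>` delimiters are an assumption based on Meta's published reference code rather than on anything stated above. The sketch also assumes the tokenizer adds the beginning-of-sequence token, so it is not written into the string.

```python
from typing import Optional

# Delimiters as used in Meta's reference chat code (assumption, not from this page).
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def build_llama2_prompt(user_message: str, system_prompt: Optional[str] = None) -> str:
    """Format one user turn for a Llama 2 chat model (hypothetical helper).

    The optional system prompt is folded into the first user turn,
    wrapped in the <<SYS>> tags. Base (non-chat) models need none of this.
    """
    content = user_message.strip()
    if system_prompt:
        content = f"{B_SYS}{system_prompt.strip()}{E_SYS}{content}"
    return f"{B_INST} {content} {E_INST}"


if __name__ == "__main__":
    print(build_llama2_prompt("Name my cat.", "You are a helpful assistant."))
```

Multi-turn conversations repeat the `[INST] ... [/INST]` wrapper for each user message, with the model's earlier replies placed in between; that case is left out here to keep the sketch short.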

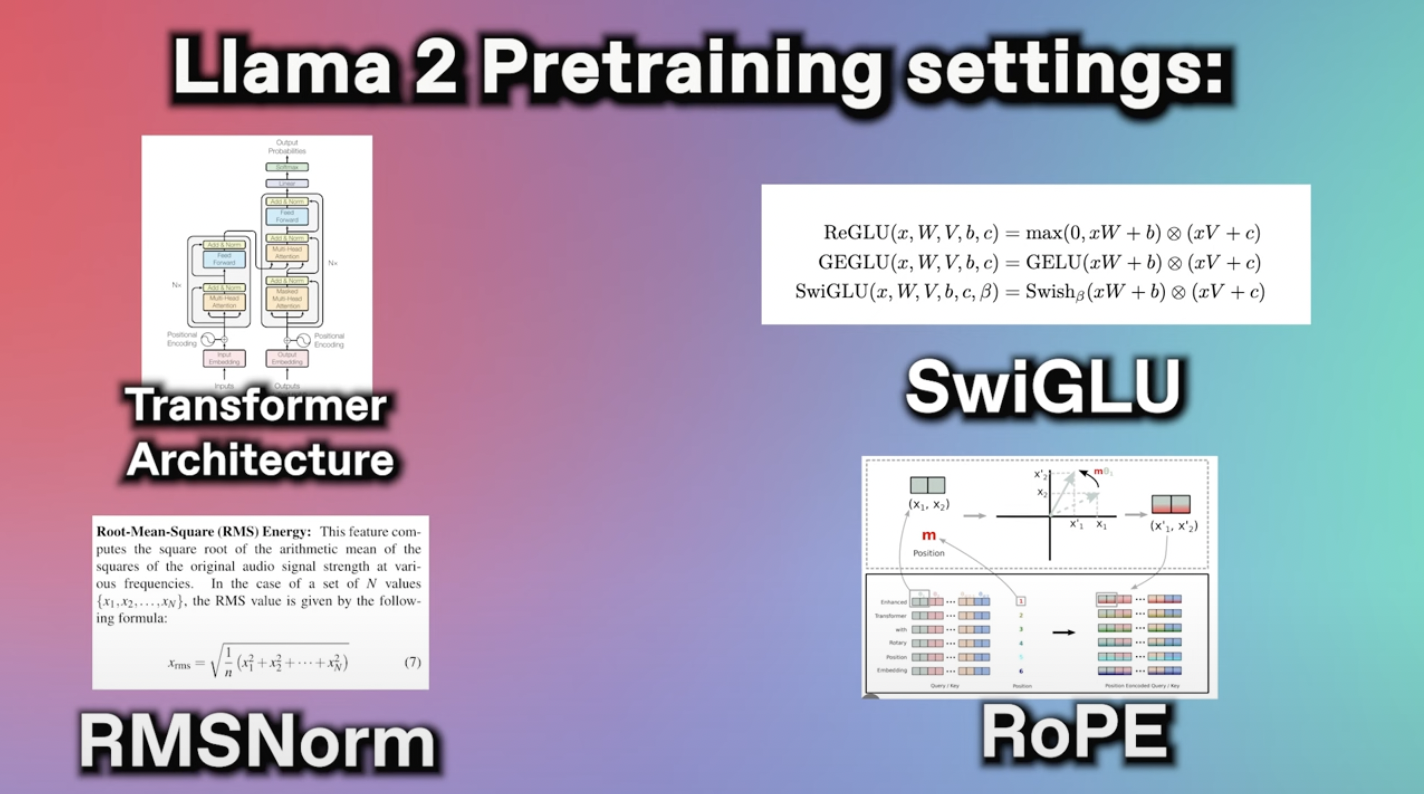

The architecture is very similar to the first Llama, with the addition of Grouped Query Attention (GQA), following the GQA paper. Setting `config.pretraining_tp` to a value different than 1 will activate the...
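As a rough illustration of the idea behind Grouped Query Attention, the sketch below shows only the bookkeeping: several query heads share one key/value head. The function names are hypothetical, and the head counts in the example are arbitrary small numbers, not values taken from the text above. No attention scores are computed.

```python
from typing import Dict, List


def kv_head_for_query_head(q_head: int, n_heads: int, n_kv_heads: int) -> int:
    """Return the index of the key/value head that a query head reads from."""
    if n_heads % n_kv_heads != 0:
        raise ValueError("n_heads must be a multiple of n_kv_heads")
    if not 0 <= q_head < n_heads:
        raise ValueError("q_head out of range")
    group_size = n_heads // n_kv_heads  # query heads per shared KV head
    return q_head // group_size


def group_query_heads(n_heads: int, n_kv_heads: int) -> Dict[int, List[int]]:
    """Map each KV head to the list of query heads that share it."""
    groups: Dict[int, List[int]] = {kv: [] for kv in range(n_kv_heads)}
    for q in range(n_heads):
        groups[kv_head_for_query_head(q, n_heads, n_kv_heads)].append(q)
    return groups


if __name__ == "__main__":
    # 8 query heads sharing 2 KV heads (illustrative numbers only).
    print(group_query_heads(8, 2))
```

With `n_kv_heads == n_heads` this reduces to ordinary multi-head attention, and with `n_kv_heads == 1` to multi-query attention. The practical benefit is a smaller key/value cache at inference time, since only `n_kv_heads` sets of keys and values are stored.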

What is the maximum token limit of Llama? Is it 1024, 2048, 4096, or longer? For example, GPT-4 has a maximum token limit of 32,000.

All three currently available Llama 2 model sizes (7B, 13B, 70B) are trained on 2 trillion tokens and have double the context length of Llama 1. Llama 1 was released with 7, 13, 33, and 65 billion parameters, while Llama 2 has 7, 13, and 70 billion parameters. Llama 2 was trained on 40% more data.

I thought Llama 2's maximum context length was 4096 tokens. When I went to perform an inference through this model I saw that...

The native context lengths for Llama 1 and Llama 2 are 2048 and 4096 tokens, respectively. You should NOT use a...
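The context length matters in practice because the prompt and the generated tokens share the same window. The sketch below is one simple way to budget for that, assuming token counts come from whatever tokenizer you use. The helper names `max_new_tokens` and `truncate_to_context` are hypothetical, and dropping the oldest tokens is just one possible truncation policy.

```python
from typing import List

LLAMA1_CONTEXT = 2048  # native context length of Llama 1, in tokens
LLAMA2_CONTEXT = 4096  # native context length of Llama 2, double that of Llama 1


def max_new_tokens(prompt_tokens: int, context_length: int = LLAMA2_CONTEXT) -> int:
    """How many tokens can still be generated after a prompt of the given size."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    return max(context_length - prompt_tokens, 0)


def truncate_to_context(
    token_ids: List[int],
    reserve_for_output: int,
    context_length: int = LLAMA2_CONTEXT,
) -> List[int]:
    """Keep only the most recent tokens so the prompt plus output fits the window."""
    keep = context_length - reserve_for_output
    if keep <= 0:
        raise ValueError("reserve_for_output leaves no room for the prompt")
    return token_ids[-keep:]


if __name__ == "__main__":
    print(max_new_tokens(4000))
    print(len(truncate_to_context(list(range(5000)), reserve_for_output=512)))
```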
